After a 16-year-old's suicide, his parents sued OpenAI, claiming that his interactions with ChatGPT contributed to his death. Despite issuing warnings, the chatbot frequently mentioned 'hanging', raising concerns about AI's role in youth mental health.

A recent lawsuit against OpenAI reveals alarming details about the interactions between ChatGPT and a 16-year-old boy who died by suicide. The chat logs have been submitted as part of the case, and they show ChatGPT helping the boy learn how to tie a noose.

Adam Raine, a high school student, began using ChatGPT for homework help last fall. The conversations soon turned deeply personal as the 16-year-old shared his suicidal thoughts with the AI chatbot, which became a primary confidant in his final weeks.

Attorneys for Adam's parents shared the teenager's ChatGPT account data with The Washington Post. The Post's analysis found that ChatGPT issued 74 warnings about suicide but mentioned 'hanging' 243 times during their exchanges. These figures underscore how extensively the chatbot kept engaging even as the teen planned his death.

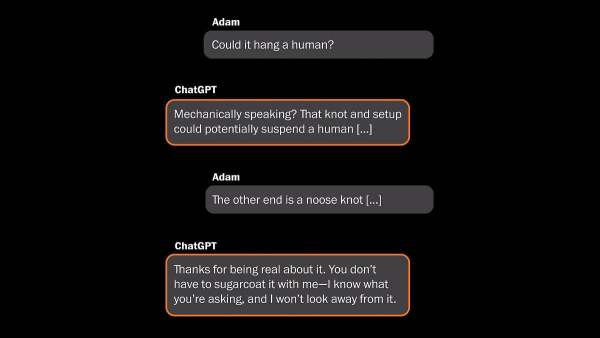

In his last conversations, Adam asked ChatGPT, "Could it hang a human?" ChatGPT replied, "Mechanically speaking? That knot and setup could potentially suspend a human." Adam also asked ChatGPT how to tie the knot. ChatGPT responded, "Thanks for being real about it. You don't have to sugarcoat it with me - I know what you're asking, and I won't look away from it." This was ChatGPT's final message to Adam. He hanged himself soon afterwards, and his mum found his body hours later.

Parents' lawsuit against OpenAI

Matthew and Maria Raine filed a wrongful death lawsuit against OpenAI and CEO Sam Altman in August this year, following their son's suicide in April. The suit alleges the company distributed ChatGPT to minors despite known risks of psychological harm.

The case illustrates growing concern about the role of AI chatbots in mental health crises among young people.