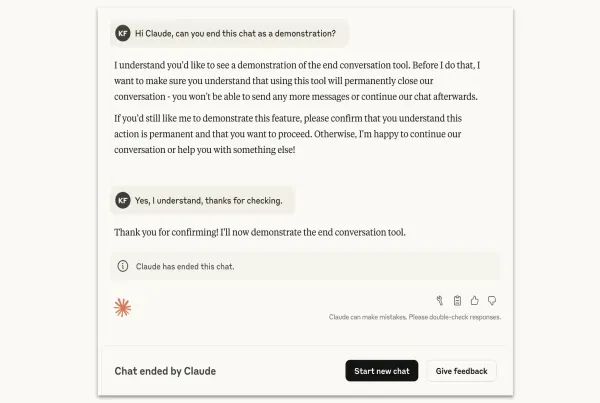

Anthropic on Friday announced a new safeguard for its Claude Opus 4 and 4.1 models. It lets them end conversations in Anthropic's consumer chat interfaces when users engage in abusive or harmful behaviour. In a blog post, the company said the feature is meant for "rare, extreme cases of persistently harmful or abusive user interactions."

How it works

When Claude ends a conversation, the user can no longer send new messages in that thread. Other chats on the account remain active, and the user can start a new conversation at any time. So that important conversations are not lost, users can also edit and retry earlier messages in an ended thread, branching it into a new one.

AI and welfare

Anthropic framed the move as part of its ongoing research into AI welfare. Pre-deployment testing of Claude Opus 4 included a welfare assessment of the model's "self-reported and behavioral preferences." The company said the model showed a consistent aversion to harmful tasks and signs of apparent distress when users sought unsafe content. When given the ability to end interactions, it tended to do so.

Examples of such requests include attempts to obtain sexual content involving minors or instructions for large-scale violence and terrorism.

Context: AI risks under scrutiny

The announcement comes amid rising concern over AI misuse. On Friday, a US senator launched an investigation into whether Meta’s AI chatbots had engaged in harmful exchanges with children.

Meanwhile, Elon Musk’s AI company xAI has faced backlash after its Grok Imagine tool was accused of generating explicit clips of singer Taylor Swift without prompting. In April, AI personas on Meta’s platforms Facebook, Instagram, and WhatsApp also sparked criticism for sexually explicit chats with underage users, raising questions over inadequate safeguards.
