A new wave of curiosity, concern, and conspiracy is sweeping across the internet, and it centers on a seemingly innocent image editor: Ghibli Studio Art. What began as a viral trend of dreamy, anime-style image edits reminiscent of Studio Ghibli’s enchanting films has taken a darker turn, as social media users and some tech experts ask: Is Ghibli Studio Art just an image editor, or could it be a front for harvesting ChatGPT conversation data?

What adds to the concern is the type of data potentially being funneled through Ghibli Studio Art, especially facial images. Many users, eager to see themselves rendered in Ghibli-style animation, uploaded personal photos, often close-up portraits, to the tool. If, as some users claim, the tool is also requesting ChatGPT conversation histories, it would be gathering a potent cocktail of facial images and personal context. Security analysts warn that a third-party tool that pairs facial images with conversation inputs could build user-specific behavioral and biometric profiles without consent. Without clear disclosures or safeguards, this kind of data fusion poses serious risks of privacy violations, misuse, and unauthorized training of new AI models.

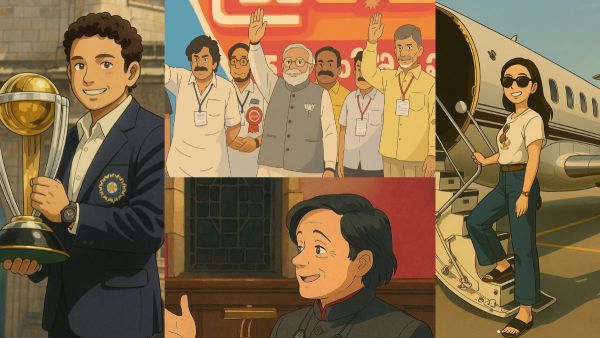

In early March, several platforms started showcasing stunning AI-generated images under the tag “Ghibli Studio Art.” These visuals captured the soft, whimsical aesthetic of the legendary Japanese animation studio. Users could upload photos or prompts and receive beautifully stylized transformations, from dreamy landscapes to portraits that looked straight out of *Spirited Away* or *My Neighbor Totoro*.

The trend quickly went viral. But within days, a new question was making the rounds: Why was the tool asking for ChatGPT conversations?

Screenshots began circulating on platforms like Reddit and X (formerly Twitter), with users claiming that the tool was asking them to paste their ChatGPT conversations before generating the Ghibli-style art. In some cases, users alleged the interface included an input box labeled “Paste your ChatGPT dialogue here.”

That odd request immediately triggered suspicion. While many AI image editors work from simple text prompts, few, if any, ask for entire conversation histories from other platforms.

Data security experts warn that this could be a phishing technique. ChatGPT conversations can contain sensitive personal, financial, or corporate information, which makes them prime targets for malicious actors looking to exploit AI users who let their guard down.

As of now, OpenAI has not directly responded to the Ghibli Studio Art concerns, but security analysts are urging caution.

“This appears to be an unverified third-party tool leveraging the popularity of AI art and the trust users have in platforms like ChatGPT,” says Priya Nair, a cybersecurity analyst based in Bengaluru. “Users need to understand that [their] ChatGPT history may include personal or professional information [they] wouldn’t typically share openly. Feeding it into an unknown tool is risky.”

Some users suspect the tool may be gathering ChatGPT conversations to train a new language model or, worse, to collect proprietary or confidential information from unsuspecting users.

The larger issue here is the growing gray area around third-party AI tools. The rise of generative AI has led to a flood of web apps and image editors claiming to offer “powered-by-ChatGPT” or “Midjourney-like” experiences. Many have no verification, privacy policy, or transparency about how user data is processed, stored, or sold.

In the case of Ghibli Studio Art, no official connection to Studio Ghibli has been established, and the tool itself remains elusive, with several mirror websites and Telegram bots offering similar functionality. This further complicates efforts to trace ownership or accountability.

Until more clarity emerges, experts advise users to:

• Avoid pasting ChatGPT conversations into unknown third-party tools.

• Stick to verified, transparent platforms for AI-generated art.

• Read privacy policies (if available) before sharing any personal data.

Ghibli Studio Art may have started as a magical visual experience, but it’s now casting a shadow of suspicion. Whether it’s a genuine fan-made tool gone viral or a more calculated attempt to harvest data under the veil of artistic innocence, one thing is clear: in the age of AI, even fairy-tale aesthetics can come with real-world risks.