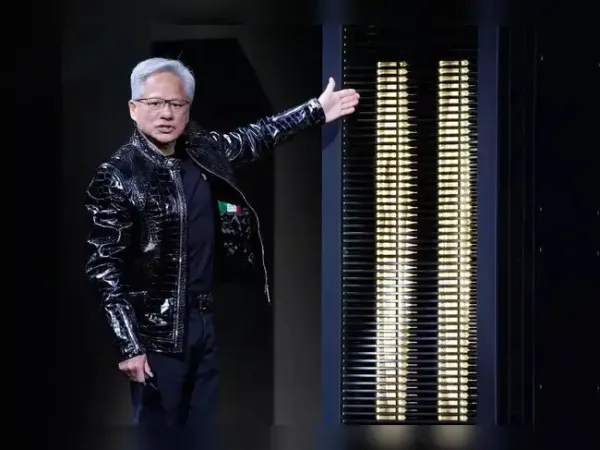

At the Consumer Electronics Show (CES) in Las Vegas on Monday, Nvidia unveiled its Rubin artificial intelligence (AI) platform, which is expected to ship in the second half of 2026, with early adoption planned by tech giants including Microsoft, Amazon, Meta and Google.

The announcement marks a shift in Nvidia's position, from selling powerful GPUs to delivering fully integrated AI computing systems.

“Rubin arrives at exactly the right moment, as AI computing demand for both training and inference is going through the roof,” said Jensen Huang, founder and CEO of Nvidia, at CES 2026.

“With our annual cadence of delivering a new generation of AI supercomputers and extreme codesign across six new chips, Rubin takes a giant leap toward the next frontier of AI.”

Extreme codesign is a holistic approach in which the different components (hardware, software, networking, algorithms, etc.) are engineered simultaneously and collaboratively.

ET breaks down why it matters for the AI ecosystem and how Nvidia stacks up against rivals in the AI infrastructure race.

What is the Rubin platform?

Rubin is Nvidia's next-generation AI computing system designed to run the most advanced AI models efficiently at scale. Rather than a single chip, Rubin comprises six tightly connected processors and networking components: Vera CPU, Rubin GPU, NVLink 6 Switch, ConnectX-9 Super NIC, BlueField-4 DPU, and Spectrum-6 Ethernet Switch.

The Vera CPU handles system-level tasks and works closely with the AI accelerators, while the Rubin GPU is the main AI engine, responsible for training large models. The NVLink 6 Switch lets GPUs share data, ConnectX-9 speeds up communication, BlueField-4 offloads networking, security, and storage tasks, and the Spectrum-6 Ethernet Switch helps ensure optimal network performance.

How is it different from the Blackwell platform?

Rubin treats AI infrastructure as one coordinated system, unlike earlier generations, where Nvidia mainly sold graphics processing units (GPUs) plugged into standard servers. Even in the recent Nvidia Blackwell platform, the GPU was the centrepiece, with CPUs, networking, and storage often supplied by different vendors.

Nvidia said Rubin delivers up to a 10X reduction in inference token cost and a 4X reduction in the number of GPUs required to train AI models, compared with the Blackwell platform.
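To make the scale of those claims concrete, here is a minimal arithmetic sketch in Python. The baseline figures (a Blackwell cost of $2.00 per million tokens and a 1,000-GPU training cluster) are hypothetical placeholders chosen for illustration, not numbers from Nvidia, and the function name is likewise invented. Because Nvidia states its figures as "up to", the output is a best-case bound rather than an expected result.

```python
import math


def best_case_rubin_estimate(baseline_cost_per_million_tokens,
                             baseline_training_gpus,
                             cost_reduction=10.0,
                             gpu_reduction=4.0):
    """Apply Nvidia's claimed 'up to' factors to a Blackwell baseline.

    The 10X and 4X defaults come from Nvidia's announcement; the
    baseline inputs are supplied by the caller and are assumptions.
    """
    return {
        # Best-case inference cost per million tokens on Rubin.
        "cost_per_million_tokens": baseline_cost_per_million_tokens / cost_reduction,
        # Best-case GPU count for the same training job, rounded up
        # because a fraction of a GPU cannot be deployed.
        "training_gpus": math.ceil(baseline_training_gpus / gpu_reduction),
    }


if __name__ == "__main__":
    # Hypothetical baseline: $2.00 per million tokens, 1,000 GPUs.
    estimate = best_case_rubin_estimate(2.00, 1000)
    print(estimate)
```

Under these assumed inputs, the best case works out to $0.20 per million tokens and 250 GPUs. Real-world savings would depend on the model, the workload, and how close a deployment gets to Nvidia's stated upper bounds.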

Nvidia claims its Spectrum-X Ethernet Photonics switch systems deliver 5X better power efficiency and uptime. While each GPU can move data quickly, the company says a Rubin system as a whole can move about 260 TB per second, which it describes as more than the entire internet can handle at once.

Nvidia also introduced the Nvidia Inference Context Memory Storage Platform alongside Rubin, a new class of AI-native storage infrastructure designed to handle inference context at gigascale.

Nvidia says Rubin is built for agentic and reasoning-heavy AI workloads. The platform is designed to support models that work across multiple steps, keep track of long conversations, and run continuously, while reducing the time and energy spent on moving data around the system.

How does Nvidia stack up against rivals?

Rivals such as AMD have narrowed the gap in accelerator performance, but Rubin shifts the contest to system-level integration, where fewer companies can match Nvidia's scale.

Hyperscalers are also building their own silicon. Google’s Tensor Processing Units (TPUs), including the Ironwood generation, are built to support both training and real-time AI workloads, linking up to 9,216 chips in one pod. Google claims that Ironwood is nearly 30x more power efficient than its first Cloud TPU from 2018.

Similarly, AWS has built its own AI training hardware, the 3-nanometre Trainium3 chip and the upcoming Trainium4, to support large-scale AI workloads. AWS says Trainium3 is over four times faster than its predecessor, offers four times more memory, and uses about 40% less energy.

Trainium competes on price and efficiency within a single cloud, while Rubin focusses less on individual chip speed and more on end-to-end efficiency at scale.

Emerging AI hardware startups such as Graphcore, Cerebras, and SambaNova are offering alternative approaches, such as Graphcore's intelligence processing unit (IPU) and designs built around a single large AI chip.

Rubin is positioned as a general-purpose platform that can cater to a broad range of customers, from cloud providers to enterprises, and even AI startups.

Where Nvidia may face limitations is in custom workloads optimised for specific cloud providers. Google’s TPUs or AWS Trainium chips, for example, are extremely efficient with respect to the services and models they are designed for, and Rubin’s general-purpose design may not always outperform these specialised solutions in niche scenarios.

Additionally, the high cost and complexity of deploying fully integrated Rubin systems could limit adoption among smaller AI startups that cannot invest in full-stack Nvidia infrastructure.

Rubin’s clients

Microsoft’s next-generation Fairwater AI superfactories will feature Nvidia Vera Rubin NVL72 rack-scale systems, scaling to hundreds of thousands of Nvidia Vera Rubin Superchips.

CoreWeave is among the first to offer Nvidia Rubin, operated through CoreWeave Mission Control for flexibility and performance.

Rubin is further expected to be adopted by a wide range of leading AI labs, cloud providers, hardware manufacturers, and startups, including AWS, Anthropic, Black Forest Labs, Cisco, Cohere, Cursor, Dell Technologies, Google, Harvey, HPE, Lambda, Lenovo, Meta, Mistral AI, Nebius, Nscale, OpenAI, OpenEvidence, Oracle Cloud Infrastructure, Perplexity, Runway, Supermicro, Thinking Machines Lab, and xAI, the company said in a media release.

Looking ahead

Nvidia's vertical integration, spanning compute, networking, data processing, and system software, increases performance predictability for large-scale deployments, while also raising migration and porting costs for customers building on Nvidia's platform.

Nvidia may have raised the stakes for rivals that must now match not only its performance but also the coherence and breadth of its full AI infrastructure stack.

